LLM Quant Revolution: Can AI Improve Investment Decision-Making in Systematic Trading?

There’s no doubt - AI is rapidly reshaping quantitative finance. But can it truly enhance our investment decision-making and drive better trading results?

Here’s my summary of the current state and future trajectory of LLMs in quantitative finance, covering:

The state of LLM technology in financial applications

A comparison of leading models and their strengths/weaknesses

Implementation frameworks for deploying LLMs in systematic investing

Risk management strategies for production-level AI usage

Key Takeaways for Quantitative Investors

Successfully integrating LLMs into systematic trading depends on how they are selected, combined, and deployed. Below are the most important considerations:

1. Multi-Model Strategies Outperform Single-Model Approaches

Combining models like GPT-4, BloombergGPT (soon available as QuantJourneyGPT), and DeepSeek can improve accuracy and reliability, because the errors of one model are less likely to be repeated by the others.

No single LLM excels at everything, so hybrid approaches improve robustness.

2. Production-Ready AI Requires Rigorous Quality Control

AI-driven trading needs structured validation pipelines, such as Retrieval-Augmented Generation (RAG), to ground outputs in verified data and filter out unreliable ones.

Systems must be repeatable and error-resistant, with human oversight ensuring reliability.

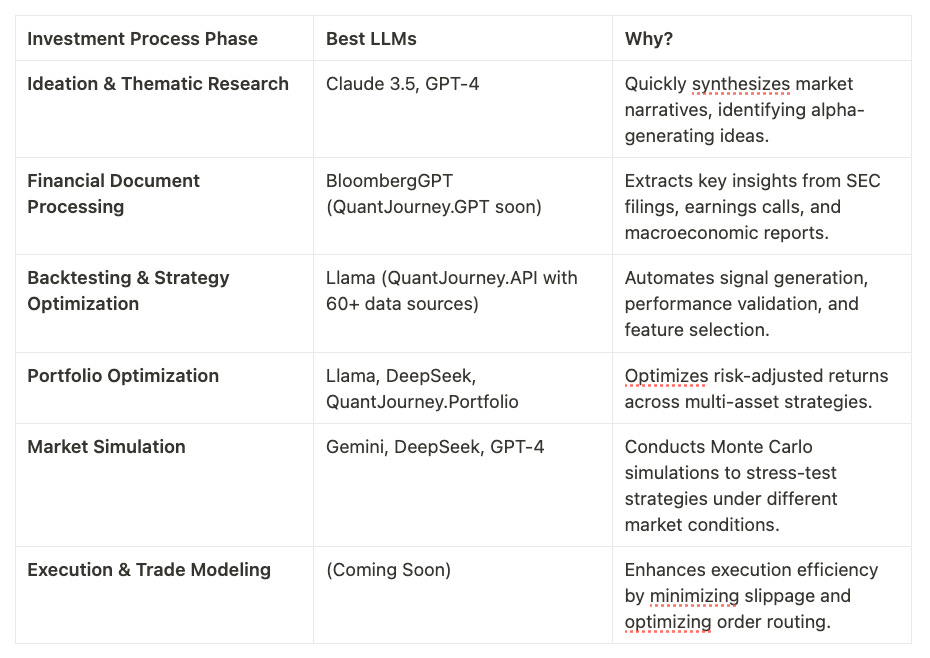

3. Task-Specific Model Selection Enhances Alpha Generation

The right model depends on the investment process phase (e.g., research, backtesting, execution).

Matching LLMs to specific investment tasks boosts efficiency and predictive power.

The Role of LLMs in Quantitative Investing

LLMs are not just research tools - they can automate key components of the investment process, replacing legacy NLP tools and streamlining research, data processing, and trade execution. They allow for:

Automated Market Research - Parsing large volumes of financial reports, earnings calls, and macro commentary far faster than manual review.

Code Generation & Debugging - Writing optimised, semi-production-ready Python for strategy testing.

Data Transformation & Feature Engineering - Structuring complex datasets into alpha signals.

However, effective use of LLMs in finance requires structured pipelines, multi-step quality control, and error mitigation strategies to prevent overfitting, hallucinations, and execution errors.

Best LLMs for Each Investment Phase

QuantJourney has the infrastructure to integrate multiple LLMs into a production-grade quant system. The best LLMs for different phases of the investment process include:

QuantJourney is developing an AI-driven investment workflow to enhance efficiency and automation.

Challenges in Deploying LLMs for Systematic Trading

While AI provides powerful capabilities, its use in live trading environments introduces risks that must be carefully managed.

1. Execution Risk & Data Integrity

Problem: AI can produce misleading insights, impacting investment decisions.

Solution: Retrieval-Augmented Generation (RAG) frameworks ground model outputs in current, validated market data, which reduces the risk of stale or fabricated insights.

2. Model Drift & Non-Deterministic Outputs

Problem: The same prompt can generate different outputs, which is unacceptable in production trading.

Solution: Cross-validating outputs across multiple models, and acting only when they agree, filters out inconsistent signals before execution.
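As a rough illustration of the cross-validation idea, here is a minimal sketch of a consensus check. The function name `consensus_signal`, the model labels, and the 0.6 agreement threshold are all assumptions made for this example, not part of any QuantJourney API; in practice the signals would come from real model calls.

```python
from collections import Counter

def consensus_signal(signals, min_agreement=0.6):
    """Return the majority signal if enough models agree, otherwise None.

    `signals` maps a (hypothetical) model name to its discrete signal,
    e.g. "buy", "hold" or "sell".
    """
    if not signals:
        return None
    counts = Counter(signals.values())
    label, votes = counts.most_common(1)[0]
    # Only pass the signal on when the share of agreeing models is high enough.
    if votes / len(signals) >= min_agreement:
        return label
    return None

# Hypothetical outputs from three models for the same prompt
outputs = {"model_a": "buy", "model_b": "buy", "model_c": "hold"}
decision = consensus_signal(outputs)
```

When the models disagree too much, the function returns `None`, which a pipeline could treat as "no trade" or route to a human for review.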

3. Lookahead Bias & Compliance Risks

Problem: Data leakage in AI-driven backtesting can produce inflated results.

Solution: Strict model governance keeps LLMs inside predefined research pipelines that only expose data available at each simulated date, so future information cannot leak into the backtest.
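One simple way to picture the lookahead problem is a point-in-time filter on whatever documents are fed to the model during a backtest. The sketch below is only an assumption about how such a guard could look; the `Document` class, the `point_in_time` helper, and the sample data are hypothetical.

```python
from dataclasses import dataclass
from datetime import date

@dataclass(frozen=True)
class Document:
    ticker: str
    published: date
    text: str

def point_in_time(docs, as_of):
    """Keep only documents published strictly before the simulated date."""
    return [d for d in docs if d.published < as_of]

# Hypothetical document store for one ticker
docs = [
    Document("ABC", date(2024, 1, 15), "Q4 earnings call transcript"),
    Document("ABC", date(2024, 4, 16), "Q1 earnings call transcript"),
]

# On a simulated date of 1 February 2024, only the January document is visible.
visible = point_in_time(docs, as_of=date(2024, 2, 1))
```

Note that this only controls the context passed to the model. It does not address information the model may have absorbed during training, which needs separate handling.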

QuantJourney’s AI-Powered Investment Framework

Where most traders rely on generic AI models, QuantJourney is building an AI-powered investment framework focused on backtesting, portfolio management, and execution. The following features are currently under development:

✔ On-demand backtesting at top speed

AI-enhanced strategy generation

Instant backtesting across multi-asset portfolios

Multi-model comparison for robust insights

✔ Seamless integration with quantitative trading pipelines

LLM-driven data ingestion and feature engineering

Automated Python script generation for portfolio modeling

Plug-and-play LLM API orchestration for strategy execution

The Use of RAG-Based Solutions

A Retrieval-Augmented Generation (RAG) framework is essential for ensuring LLM outputs remain consistent, relevant, and production-ready.

How It Works in Finance:

Data Ingestion & Context Retrieval:

The pipeline retrieves validated financial datasets (earnings reports, macro indicators, risk models) and supplies them to the LLM as context.

LLM Processing & Generation:

The model interprets the retrieved data and generates insights, constrained by investment rules.

Quality Control & Risk Checks:

Multi-step verification filters hallucinations and detects anomalies.

Final Execution Layer:

Validated output is integrated into the investment pipeline.

By implementing this structured workflow, QuantJourney aims to develop robust AI-driven decision-making tools while minimising execution risk.
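The four stages above can be sketched in a few lines of Python. This is a toy illustration under stated assumptions, not QuantJourney's implementation: the store contents are made up, `retrieve` uses naive word overlap instead of a vector index, `generate` is a placeholder standing in for a real LLM call, and the only quality check is that every number in the answer also appears in the retrieved context.

```python
import re

# Hypothetical store of validated snippets (stage 1 input)
VALIDATED_STORE = [
    "ABC Corp Q1 revenue was 120 million USD, up 8 percent year over year.",
    "Central bank held the policy rate at 5.25 percent in March.",
]

def _words(text):
    return set(re.findall(r"\w+", text.lower()))

def retrieve(query, store, k=1):
    """Stage 1: rank snippets by the number of words shared with the query."""
    ranked = sorted(store, key=lambda d: len(_words(query) & _words(d)), reverse=True)
    return ranked[:k]

def generate(query, context):
    """Stage 2: placeholder for an LLM call; here it just echoes the top snippet."""
    return context[0]

def numbers_grounded(answer, context):
    """Stage 3: every number in the answer must appear in the retrieved context."""
    pattern = r"\d+(?:\.\d+)?"
    allowed = set(re.findall(pattern, " ".join(context)))
    return all(n in allowed for n in re.findall(pattern, answer))

def run_pipeline(query, store=VALIDATED_STORE, llm=generate):
    """Stage 4: pass validated output on, or flag it for human review."""
    context = retrieve(query, store)
    answer = llm(query, context)
    status = "accepted" if numbers_grounded(answer, context) else "needs_review"
    return {"status": status, "answer": answer}

result = run_pipeline("What was ABC Corp revenue in Q1?")
```

Swapping `generate` for a model that invents a figure (for example, one returning "Revenue was 999 million USD.") makes the pipeline return `needs_review` instead of `accepted`, which is the behaviour the quality-control stage is meant to provide.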

Final Thoughts: Why LLMs Will Not Replace Human Judgment

Despite their power, LLMs should not be used as black-box decision engines. Instead, they should serve as enhanced research tools, helping quants and traders:

Generate higher-quality insights

Automate repetitive tasks

Reduce research time

However, final trading decisions must remain under human oversight, with robust AI validation systems in place.

🚀 The Future of Quantitative Investing

Multi-model AI pipelines will become the industry standard for systematic investing.

Quant funds will increasingly leverage LLMs for research, risk management, and execution modeling.

The best AI trading frameworks will balance automation with human discretion - exactly what QuantJourney is currently building.

Conclusion

QuantJourney is actively building a high-performance infrastructure for LLM-driven investment processes, with several integration points already in place:

Automating investment research with intelligent LLM pipelines

Enhancing backtesting frameworks with AI-driven strategy generation

Deploying cutting-edge AI models for real-time trade execution

Happy trading!

Jakub